In the rapidly evolving field of deep learning, new neural network architectures are constantly emerging, and keeping pace with them means studying concrete case studies. This blog is based on the second week of the fourth course in Professor Andrew Ng's Deep Learning Specialization, which focuses on case studies of convolutional neural networks. Significance of Case Studies: First, consider why we need to study these cases. They embody the knowledge and experience that earlier researchers accumulated in network design. By studying them, we can see which design ideas succeeded and gain a deeper understanding of how to design network structures. Second, good network architectures often transfer well: the structure of AlexNet, which was built for ImageNet classification, can also be applied to other visual tasks, so learning these architectures helps us design general models. Third, reading papers is a way to improve oneself. Following cutting-edge developments broadens our horizons and strengthens our analytical and learning abilities. Even if one is not engaged in visual…

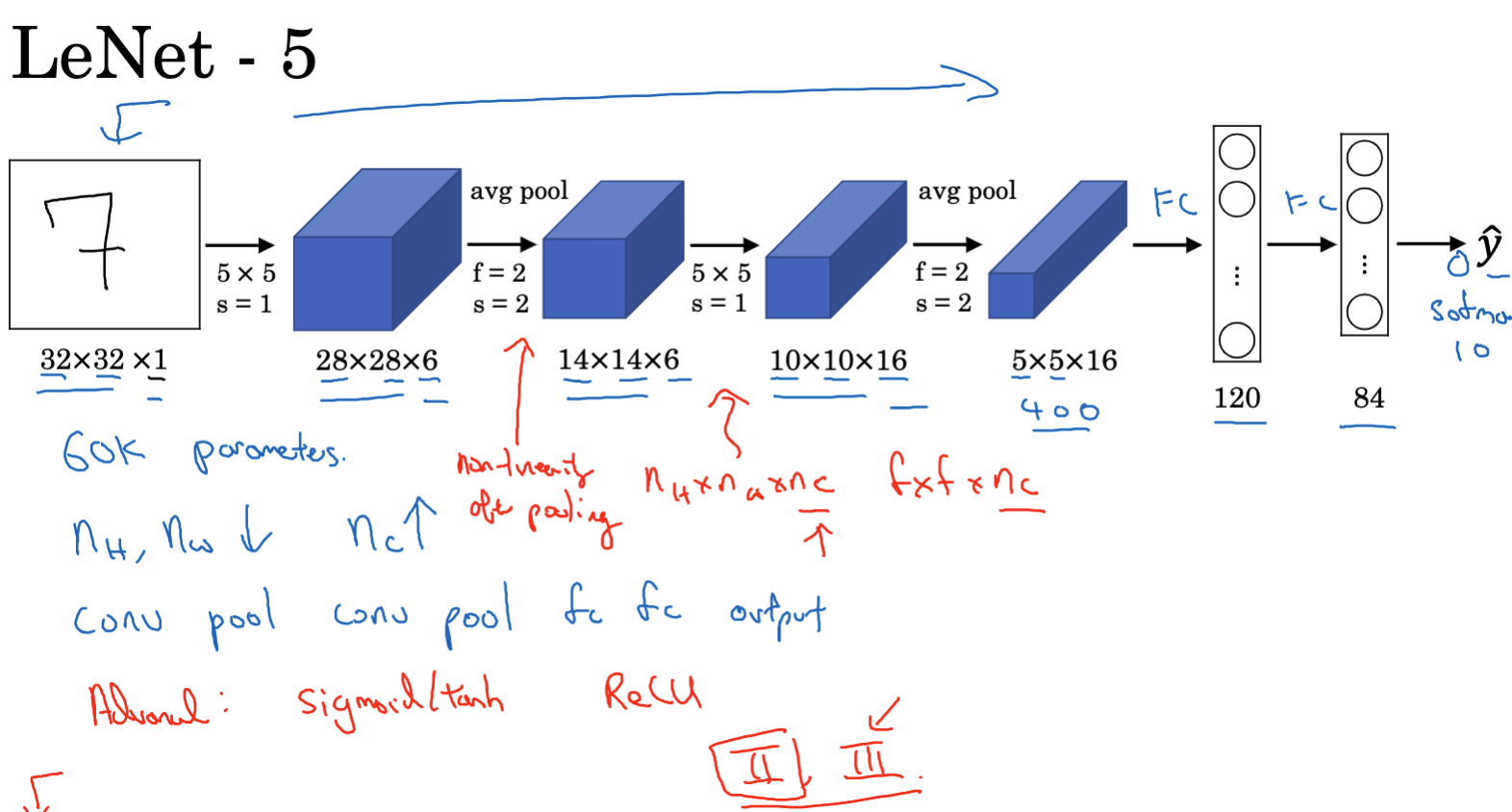

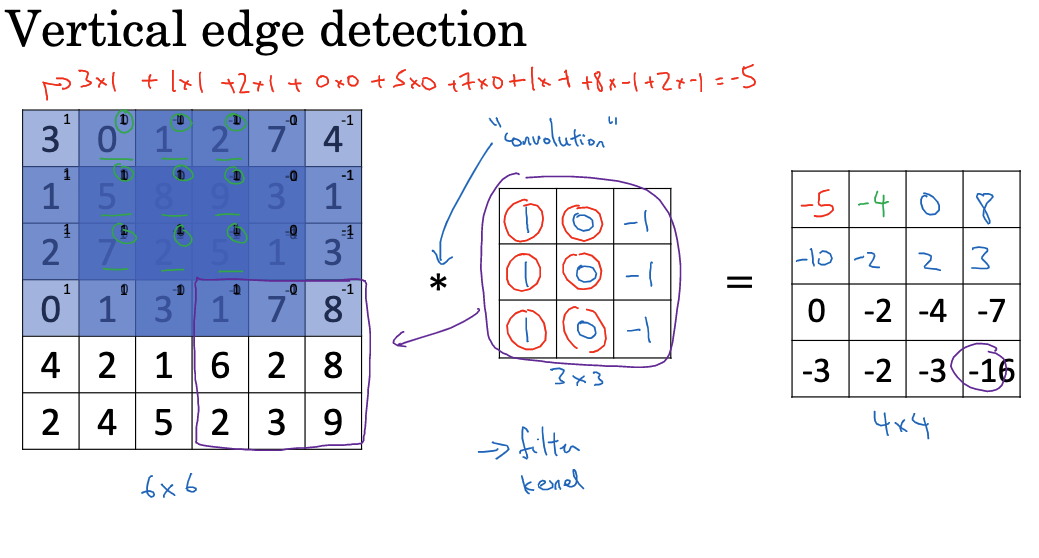

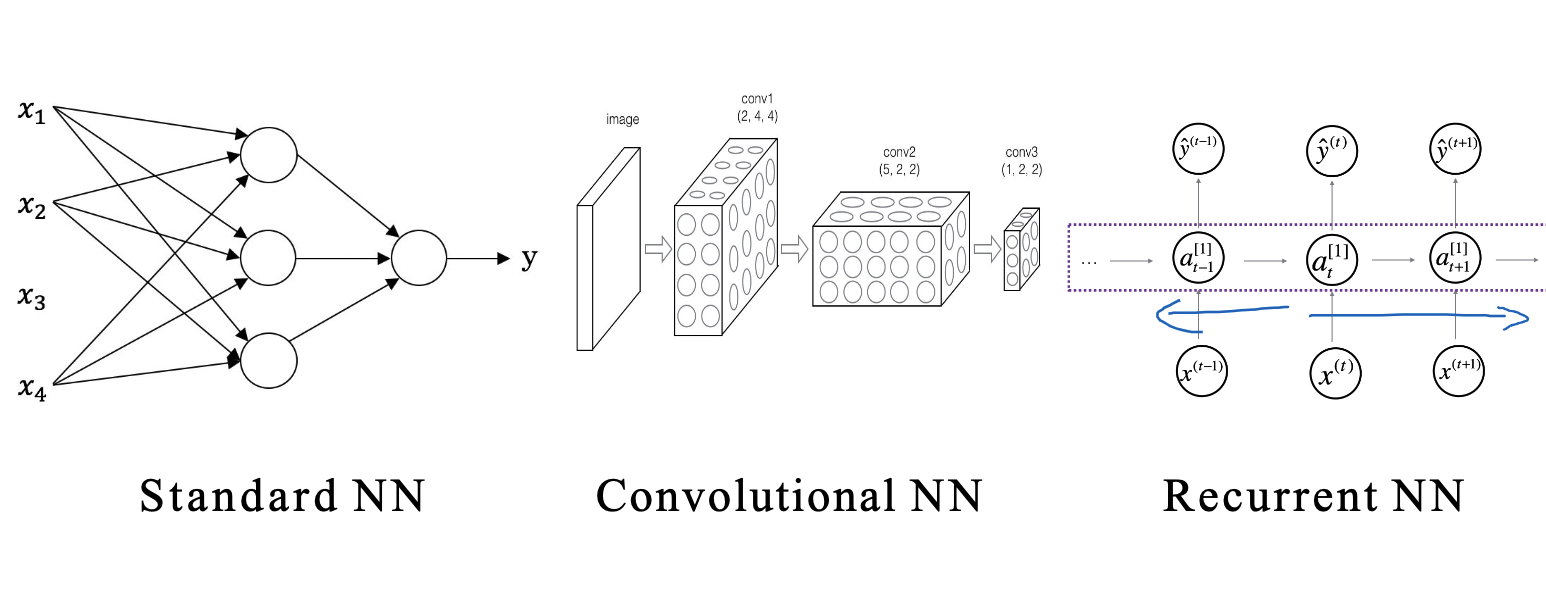

Convolutional Neural Networks (CNNs) are a type of deep neural network designed for image processing. Inspired by the structure of biological visual systems, CNNs use convolution operations to extract spatial features from images and combine these with fully connected layers for classification or prediction. Convolution is what lets CNNs excel at image processing, which makes them widely applicable to tasks such as image classification, object detection, and semantic segmentation. This blog gives a brief introduction to the basics of convolutional neural networks, based on the first week of the fourth course in Professor Andrew Ng's Deep Learning Specialization. Starting with Computer Vision: Computer vision is a rapidly developing field within deep learning. Its applications include enabling autonomous vehicles to recognize surrounding vehicles and pedestrians, facial recognition, and showing users different kinds of images, such as food, hotels, and landscapes. Convolutional neural networks are used extensively in image processing for tasks like image classification, object detection, and neural style transfer. Image classification involves identifying the content within…
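To make the convolution operation mentioned in this excerpt concrete, here is a minimal sketch in plain Python. The function name `conv2d_valid`, the 6x6 image, and the 3x3 vertical edge filter are illustrative assumptions, not taken from the post. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Hypothetical helper for illustration; the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products over the current window.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Assumed toy image: bright (10) left half, dark (0) right half.
image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]
# Assumed 3x3 vertical edge filter.
vertical_edge = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]

feature_map = conv2d_valid(image, vertical_edge)
for row in feature_map:
    print(row)  # each row is [0, 30, 30, 0]
```

The nonzero values in the middle columns of the feature map mark where the image changes from bright to dark, which is the sense in which a convolution "extracts a spatial feature".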

Machine learning is a key driver of technological advancement today. A systematic machine learning strategy is essential for moving projects forward efficiently and achieving the desired outcomes. It requires careful attention to several critical steps, including goal setting, model selection, data processing, and results evaluation. In this blog, we explore these steps in detail, with a particular focus on effective strategies for setting machine learning goals, evaluating model performance, and optimizing models. By the end, you should have a deeper understanding of the machine learning project lifecycle and be able to apply these methods to improve your own projects. This blog covers the third course in the Deep Learning Specialization by Andrew Ng. Because that course is short, we summarize it in a single post. Let's dive in! 1. Orthogonalization: Streamlining Goals and Means. Orthogonalization is a crucial concept in machine learning strategy. It means tuning different aspects of a machine learning system so that each adjustment targets one specific goal independently of the others. Consider the…

This blog focuses on hyperparameter tuning, batch normalization, and common deep learning frameworks, and covers the final week of the second course in the Deep Learning Specialization. Let's dive in! Hyperparameter Tuning: Hyperparameter tuning is a crucial process in deep learning, because hyperparameter settings directly affect model performance. This section explores why tuning matters, which hyperparameters affect performance most, and methods and strategies for selecting them. The Importance of Hyperparameter Tuning: Well-chosen hyperparameters can significantly improve training efficiency, generalization, and test results. Conversely, poor choices can lead to slow training, ineffective learning, overfitting, and other problems. The importance of hyperparameter tuning shows up in the following ways: Hyperparameters directly influence the model's complexity and therefore its learning capacity. A model with appropriate complexity can learn the patterns in the data while avoiding overfitting. Different hyperparameters can affect the speed of model training.…

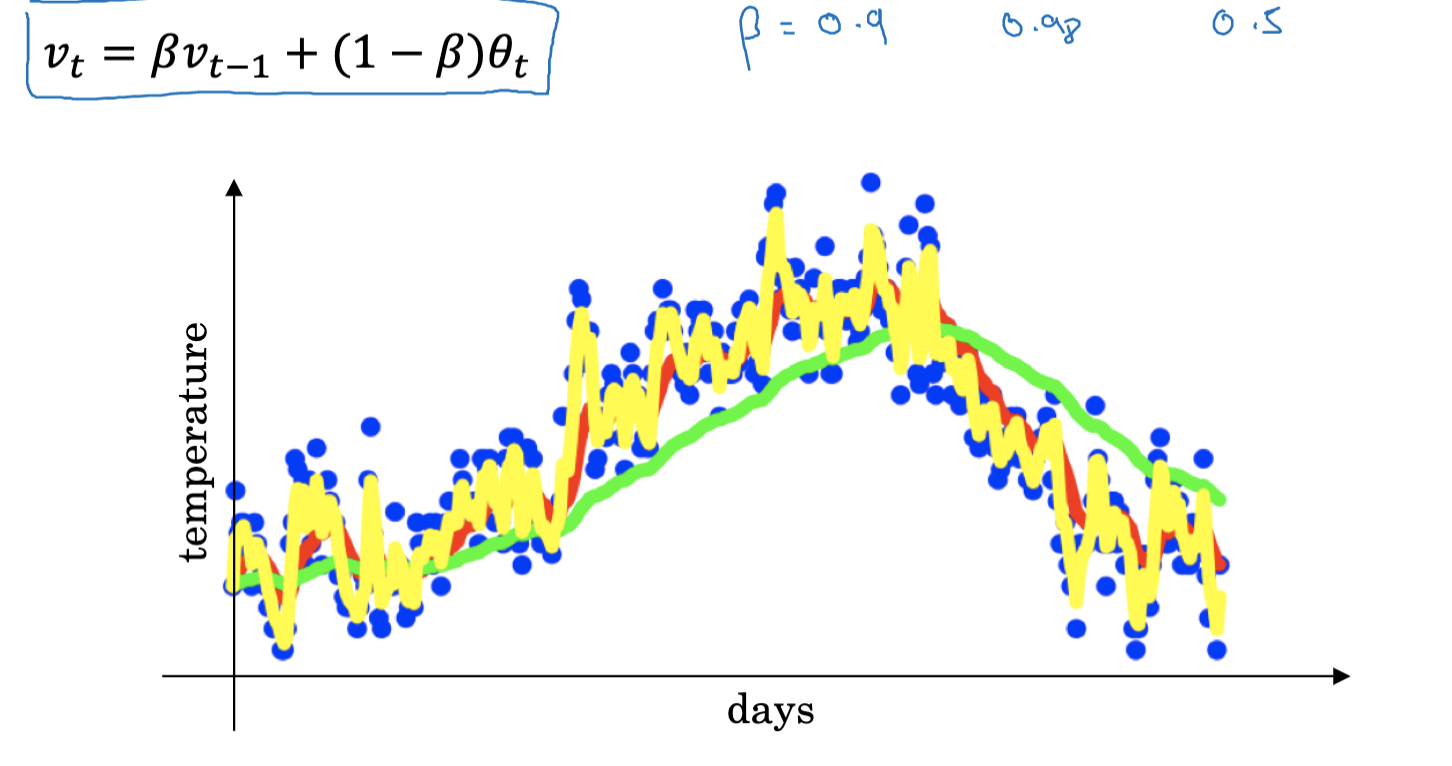

This week's content focuses on optimization algorithms, which can significantly improve and speed up the training of deep learning models. Let's dive in! 1. Importance of Optimization Algorithms: Optimization algorithms are crucial in machine learning and deep learning, particularly when training deep neural networks. They are methods for minimizing (or maximizing) a function, typically the loss function in deep learning, with the goal of finding the parameters that minimize it. Why Optimization Algorithms are Necessary: In deep learning, the aim is to achieve the best possible performance on the training data while preventing overfitting, so that the model also performs well on new, unseen data. This requires balancing between overfitting and underfitting, and optimization algorithms are the tools that help us find this balance. Common Types of Optimization Algorithms: Batch Gradient Descent: Uses all training samples to perform gradient descent in each iteration. Stochastic Gradient Descent: Uses one training sample to perform gradient descent in each iteration. Mini-batch Gradient Descent: Uses a subset of training samples to perform gradient descent in each…
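The three variants listed in this excerpt differ only in how many samples feed each update. The sketch below illustrates that with a single `batch_size` parameter on a toy one-parameter linear model; the function name `train_linear`, the data, the learning rate, and the epoch count are all assumptions chosen for illustration, not values from the post.

```python
import random


def train_linear(xs, ys, batch_size, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x by gradient descent on mean squared error.

    Hypothetical helper: batch_size == len(xs) gives batch gradient descent,
    batch_size == 1 gives stochastic gradient descent, anything in between
    gives mini-batch gradient descent.
    """
    rng = random.Random(seed)
    indices = list(range(len(xs)))
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(indices)  # visit samples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((w * x - y)^2) over the batch with respect to w.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
    return w


# Assumed noise-free toy data generated from y = 2x.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [2 * x for x in xs]

w_batch = train_linear(xs, ys, batch_size=len(xs))  # batch gradient descent
w_sgd = train_linear(xs, ys, batch_size=1)          # stochastic gradient descent
w_mini = train_linear(xs, ys, batch_size=4)         # mini-batch gradient descent
print(w_batch, w_sgd, w_mini)  # all three approach 2.0 on this toy problem
```

On noise-free data like this, all three settings recover the same weight. The practical differences (cost per update and noisiness of the updates) only become visible on larger, noisier datasets.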

In learning deep learning, we encounter a great deal of theory, including gradient descent, backpropagation, and loss functions. Truly understanding and applying this theory is what lets us solve practical problems with ease. This blog, drawing from Week 1 of Course 2 in Professor Andrew Ng's Deep Learning Specialization, explores critical concepts and methods from a practical standpoint. Key topics include how to divide data into training, development, and test sets, understanding and managing bias and variance, when and how to use regularization, and properly setting up optimization problems. 1. Dataset Division: Training Set, Validation Set, and Test Set. Training a deep learning model requires three datasets: a training set to train the model, a development set (or validation set) to tune the model's hyperparameters, and a test set to evaluate the model's performance. The model learns from the training set and tries to identify the underlying patterns in that data. The validation set is used during training to ensure the model is…
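As a rough illustration of the three-way division described in this excerpt, the sketch below shuffles a dataset and slices it into training, development, and test sets. The function name `split_dataset` and the 60/20/20 proportions are assumptions for the example; the post's own recommended ratios are not visible in the excerpt.

```python
import random


def split_dataset(examples, dev_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle `examples` and split them into (train, dev, test) lists.

    Hypothetical helper; the default fractions are illustrative only.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed for a reproducible split
    n_dev = round(len(shuffled) * dev_fraction)
    n_test = round(len(shuffled) * test_fraction)
    n_train = len(shuffled) - n_dev - n_test
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]
    return train, dev, test


train, dev, test = split_dataset(range(100))
print(len(train), len(dev), len(test))  # 60 20 20
```

Shuffling before slicing keeps the three sets drawn from the same distribution, and the slices do not overlap, so no example used for training leaks into evaluation.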

The explosive popularity of ChatGPT and the recent flurry of large-scale model developments have thrust the field of artificial intelligence into the spotlight. As a tech enthusiast keen on exploring various technologies, I naturally want to understand the principles behind these advancements. Starting with deep learning is a logical step in delving deeper into AI, especially since I've already studied Professor Andrew Ng's Machine Learning course. Now, through his Deep Learning Specialization, I am furthering my knowledge in this domain. This article aims to demystify deep learning, drawing insights from the first course of the specialization. 1. Neural Networks: An Overview and Fundamental Concepts. Neural networks are algorithmic models that mimic the human brain's neural network for distributed and parallel information processing. A network comprises a large number of interconnected nodes (or neurons); each neuron applies a simple function to its incoming signals and passes the result to subsequent neurons. Training aims to minimize the network's prediction error by repeatedly adjusting its parameters, namely the connection weights between neurons. Below is an illustration…
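A single neuron of the kind described in this excerpt can be sketched as a weighted sum of its inputs followed by a simple nonlinearity. The sigmoid activation, the function name `neuron`, and the input and weight values below are assumptions for illustration; the excerpt does not say which function the post uses.

```python
import math


def neuron(inputs, weights, bias):
    """Weighted sum of inputs plus bias, passed through a sigmoid (assumed activation)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Assumed toy values: 0.5 * 1.0 + (-0.25) * 2.0 + 0.0 = 0, and sigmoid(0) = 0.5.
output = neuron([1.0, 2.0], [0.5, -0.25], 0.0)
print(output)  # 0.5
```

Training adjusts `weights` and `bias` so that outputs like this one move closer to the desired targets.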