In the journey of learning deep learning, we encounter extensive theoretical knowledge, including gradient descent, backpropagation, and loss functions. A true understanding and application of these theories allow us to solve practical problems with ease. This blog, drawing from Week 1 of Course 2 in Professor Andrew Ng’s Deep Learning Specialization, explores critical concepts and methods from a practical standpoint. Key topics include how to divide training, development, and test sets, understanding and managing bias and variance, when and how to use regularization, and properly setting up optimization problems.

1. Dataset Division: Training Set, Validation Set, and Test Set

When training a deep learning model, a training set is required to fit the model’s parameters, a development set (or validation set) is used to tune the model’s hyperparameters, and a test set is used to evaluate the final model’s performance.

The training set is the dataset used to train the model, where the model learns from this data and tries to identify its underlying patterns. The validation set is used during training to ensure the model is learning correctly. This dataset helps in tuning the model’s hyperparameters and selecting the best model architecture. The test set is used to confirm the model’s ability to handle unseen data. After development, we evaluate the model on the test set to assess its generalization ability. A common split ratio is 60%/20%/20%. However, for larger datasets, such as those exceeding a million samples, a 98%/1%/1% split can be used. Additionally, if project requirements allow, a setup with only a training set and validation set without a test set is also acceptable.
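The split ratios above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the function name `split_dataset` and its parameters are hypothetical, and it assumes the samples can simply be shuffled (no time ordering or class stratification).

```python
import random


def split_dataset(samples, train_frac=0.6, dev_frac=0.2, seed=0):
    """Shuffle samples and split them into train/dev/test lists.

    The test set receives whatever remains after the train and dev
    fractions, so train_frac=0.98, dev_frac=0.01 gives a 98/1/1 split.
    """
    if not 0 < train_frac + dev_frac <= 1:
        raise ValueError("train_frac + dev_frac must be in (0, 1]")
    shuffled = list(samples)
    # A local RNG with a fixed seed keeps the split reproducible.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_dev = round(len(shuffled) * dev_frac)
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]
    return train, dev, test


train, dev, test = split_dataset(range(1000))
print(len(train), len(dev), len(test))
```

Setting `train_frac + dev_frac` to 1 yields an empty test set, which corresponds to the training-plus-validation-only setup mentioned above.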

2. Understanding Bias and Variance

In statistics and machine learning, bias and variance are two critical concepts.

- Bias: Bias refers to the systematic difference between the predicted values and the actual values. High bias usually means the model is too simple to capture the patterns in the data, which is also known as underfitting. For example, if our training set error is 15% and the development set error is 16%, this might indicate that our model has high bias.

- Variance: Variance is the model’s sensitivity to the particular training set it was given. If the variance is too high, the model is likely too complex and overreacts to minor changes in the training set, which is also known as overfitting. For instance, if our training set error is 1% and the development set error is 11%, this might indicate that our model has high variance.

When our training set error is 15% and the development set error is 30%, this indicates that our model has both high bias and high variance. Conversely, if our training set error is 0.1% and the development set error is 1%, it suggests that both bias and variance are well controlled. These judgments assume that the Bayes error rate (the lowest error any model could achieve on the task) is close to zero. If the Bayes error rate is high, for example because even humans make errors 15% of the time, a model with a training set error of 15% and a development set error of 16% is actually quite good.
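The reasoning in these examples can be written as a small rule of thumb. The sketch below is only illustrative: the function name `diagnose` and the 2-percentage-point threshold `tol` are assumptions, not standard values, and the Bayes error defaults to zero unless one is supplied.

```python
def diagnose(train_err, dev_err, bayes_err=0.0, tol=0.02):
    """Label a model from its error rates, given as fractions (0.15 = 15%).

    Bias is judged by the gap between training error and Bayes error;
    variance is judged by the gap between dev error and training error.
    `tol` is an illustrative threshold, not a standard value.
    """
    high_bias = (train_err - bayes_err) > tol
    high_variance = (dev_err - train_err) > tol
    if high_bias and high_variance:
        return "high bias and high variance"
    if high_bias:
        return "high bias"
    if high_variance:
        return "high variance"
    return "low bias and low variance"


# The four cases from the text, then the last one with a 15% Bayes error.
print(diagnose(0.15, 0.16))
print(diagnose(0.01, 0.11))
print(diagnose(0.15, 0.30))
print(diagnose(0.001, 0.01))
print(diagnose(0.15, 0.16, bayes_err=0.15))
```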

3. Basic Strategies in Machine Learning

In machine learning, we can balance and control bias and variance through several basic strategies.

- Diagnosing Bias and Variance: We can diagnose bias and variance by observing the model’s error on the training and development sets to determine if there are issues with high bias or high variance.

- Addressing High Bias: If the model performs poorly on the training set, it suggests high bias. We can address high bias by adding more hidden layers or units, extending training duration, using more advanced optimization algorithms, or finding a more suitable neural network architecture.

- Addressing High Variance: If the model performs well on the training set but noticeably worse on the development set, it suggests high variance. We can address high variance by acquiring more training data, using regularization methods to reduce overfitting, or finding a more suitable neural network architecture.

- Bias-Variance Tradeoff: In deep learning, it is often possible to reduce one without hurting the other. Increasing the network size usually reduces bias without raising variance much, provided the network is appropriately regularized; similarly, acquiring more data generally reduces variance without increasing bias.

- Regularization: Regularization is an effective method to reduce overfitting, i.e., high variance. In some cases, regularization may slightly increase bias, but if the network size is large enough, this increase is usually minimal.

4. Regularization

When training deep learning models, we often encounter issues of underfitting and overfitting. Underfitting occurs when the model is too simple to capture all the patterns in the data, while overfitting happens when the model is too complex and learns the noise and specifics of the training data too well, resulting in poor performance on unseen test data. To mitigate overfitting, we can use a technique called regularization.

4.1 What is Regularization

Regularization is a technique used to reduce overfitting and improve a model’s performance on test data. Overfitting means the model performs well on training data but poorly on test data because it has over-learned the noise and outliers in the training data and cannot generalize well to new, unseen data.

The core idea of regularization is to introduce an additional penalty term in the loss function to prevent the model from becoming too complex. This penalty term is usually related to the model’s weight parameters and imposes a penalty on models with large parameter values, encouraging the model’s weights to be smaller or zero for some features. This helps the model not to overly rely on certain features or become too complex, enhancing its ability to generalize to new data.

This method can be intuitively understood. If a predictive model is too complex and considers every minor detail, it might overfit some random noise in the training data and fail to make accurate predictions on new, unknown data. Regularization limits the model’s complexity, preventing it from fitting every minor detail in the training data, making it more likely to capture the true patterns and not focus excessively on the noise.

4.2 L1 and L2 Regularization

L1 and L2 regularization are two common methods. L1 regularization adds the absolute values of the parameters as a penalty term in the loss function, encouraging the use of fewer parameters, resulting in a sparse model, which is useful for feature selection. L2 regularization adds the sum of the squared parameters as a penalty term, encouraging smaller parameter values, thus preventing the model from overly relying on specific features in the training data.

4.2.1 L1 Regularization

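L1 regularization, also known as Lasso regularization, involves adding the sum of the absolute values of the model parameters to the loss function. The formula is:

$$J_{L1}(w) = J(w) + \frac{\lambda}{m} \sum_{j} \lvert w_j \rvert$$

where $J(w)$ is the unregularized loss, $m$ is the number of training examples, and $\lambda \ge 0$ is the regularization strength. Scaling conventions vary; some texts omit the $1/m$ factor.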

L1 regularization tends to drive the model parameters towards zero, resulting in a sparse model. This means some unimportant parameters become zero, achieving feature selection.

4.2.2 L2 Regularization

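L2 regularization, also known as Ridge Regression or weight decay, involves adding the sum of the squared model parameters to the loss function. The formula is:

$$J_{L2}(w) = J(w) + \frac{\lambda}{2m} \sum_{j} w_j^2$$

where $J(w)$ is the unregularized loss, $m$ is the number of training examples, and $\lambda \ge 0$ is the regularization strength. The factor of $\frac{1}{2}$ is a convention that simplifies the gradient.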

Unlike L1 regularization, L2 regularization does not force the parameters to zero but brings them closer to zero, resulting in a “smooth” model. L2 regularization prevents the model’s weights from becoming too large, reducing complexity and overfitting.
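The name "weight decay" can be made concrete with a short derivation. This is a sketch under stated assumptions: the L2 penalty is taken to be $\frac{\lambda}{2m}\sum_j w_j^2$, $\alpha$ denotes the learning rate, and $dw$ denotes the gradient of the unregularized loss. The penalty contributes $\frac{\lambda}{m} w$ to the gradient, so one gradient descent step becomes:

```latex
\begin{aligned}
w &\leftarrow w - \alpha \left( dw + \frac{\lambda}{m}\, w \right) \\
  &= \left( 1 - \frac{\alpha \lambda}{m} \right) w - \alpha\, dw
\end{aligned}
```

Each step first shrinks $w$ by the factor $1 - \frac{\alpha\lambda}{m}$, which is slightly less than 1, and then applies the usual update. This is why the weights move toward zero without being forced to exactly zero.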

When choosing between L1 and L2 regularization, consider their characteristics and application scenarios. For a sparse model or feature selection, choose L1 regularization. To reduce model complexity and overfitting, choose L2 regularization. In practice, both can be used simultaneously, known as Elastic Net regularization.
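The three penalties can be compared side by side. The sketch below assumes the scaling $\frac{\lambda}{m}$ for L1 and $\frac{\lambda}{2m}$ for L2 (conventions differ between texts), and the function names are hypothetical. It uses plain Python lists so it runs without extra libraries.

```python
def l1_penalty(weights, lam, m):
    """L1 term: (lam / m) * sum of absolute weights."""
    return (lam / m) * sum(abs(w) for w in weights)


def l2_penalty(weights, lam, m):
    """L2 term: (lam / (2 * m)) * sum of squared weights."""
    return (lam / (2 * m)) * sum(w * w for w in weights)


def elastic_net_penalty(weights, lam1, lam2, m):
    """Elastic Net: an L1 term and an L2 term with separate strengths."""
    return l1_penalty(weights, lam1, m) + l2_penalty(weights, lam2, m)


weights = [1.0, -2.0, 3.0]
print(l1_penalty(weights, lam=1.0, m=2))
print(l2_penalty(weights, lam=1.0, m=2))
print(elastic_net_penalty(weights, lam1=1.0, lam2=1.0, m=2))
```

Whichever penalty is chosen, its value is added to the unregularized loss before gradients are computed.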

4.3 Dropout Regularization

Dropout is an effective technique to prevent overfitting in neural networks, especially for large networks. The idea is simple: during training, randomly select a portion of neurons and “turn them off”. Specifically, in each training step, randomly select some neurons and set their output to zero. This simulates “network damage” during each step, making it like training a smaller network each time, constructing and training many different small networks.

This method is useful because it increases model robustness, requiring the model to learn how to make accurate predictions even when some neurons are suddenly “turned off.” Randomly turning off neurons also limits complex co-adaptations, preventing overfitting. Each neuron must work correctly in all possible circumstances, learning more useful and general features.

Dropout training is like training many small neural networks and averaging their predictions. Dropout can be seen as a concise ensemble learning technique, training many different models and combining their predictions. It provides a simple, computationally efficient way to approximate training and ensembling many network architectures.

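Note that Dropout is only used during training. During evaluation or testing, Dropout is not used because the network should use all its capacity to make the best predictions. Because fewer neurons are active during training than at test time, the activations must be rescaled so that their expected values match. There are two equivalent conventions. With inverted dropout, the approach taught in the course, the outputs of the remaining neurons are divided by the keep probability during training, so nothing changes at test time. With the original formulation, training outputs are left unscaled and test-time outputs are multiplied by the keep probability instead; for example, if neurons are dropped with a 50% probability during training, halve the neuron outputs during testing. Use one convention, not both.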
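A minimal sketch of the inverted dropout variant follows. In this variant the surviving activations are divided by the keep probability during training, so no rescaling is needed at test time. The function name `dropout_forward` and its parameters are hypothetical, and a single layer's activations are represented as a plain list for simplicity.

```python
import random


def dropout_forward(activations, keep_prob, training, rng=random):
    """Apply inverted dropout to one layer's activations.

    During training each unit is kept with probability keep_prob and the
    kept values are divided by keep_prob, so the expected activation is
    unchanged. During evaluation the activations pass through untouched.
    """
    if not training or keep_prob >= 1.0:
        return list(activations)
    out = []
    for a in activations:
        if rng.random() < keep_prob:
            out.append(a / keep_prob)  # keep the unit and rescale it
        else:
            out.append(0.0)  # drop the unit
    return out


rng = random.Random(0)
train_out = dropout_forward([1.0] * 10, keep_prob=0.5, training=True, rng=rng)
eval_out = dropout_forward([1.0] * 10, keep_prob=0.5, training=False)
print(train_out)
print(eval_out)
```

Passing `keep_prob=1.0` disables dropout entirely, which is useful when debugging.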

4.4 Other Regularization Methods

Besides L1, L2, and Dropout, other regularization methods include:

- Early Stopping: Stop training when performance on the validation set no longer improves, preventing overfitting.

- Data Augmentation: Increase training data diversity by making changes (like rotation, scaling) to enhance generalization.

- Batch Normalization: Normalize each layer’s values over the mini-batch to zero mean and unit variance, then apply a learnable scale and shift. This speeds up training and, because the mini-batch statistics are noisy, has a mild regularizing effect.
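Of the methods above, early stopping is the easiest to sketch in code. The example below assumes a list of development set losses recorded once per epoch and a hypothetical `patience` parameter (how many non-improving epochs to tolerate); neither comes from the course itself.

```python
def early_stopping_epoch(dev_losses, patience=3):
    """Return the index of the epoch whose weights should be kept.

    Scans the dev-set losses in order and stops once `patience`
    consecutive epochs fail to improve on the best loss seen so far.
    """
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(dev_losses):
        if loss < best_loss:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # no improvement for `patience` epochs in a row
    return best_epoch


losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 0.1]
print(early_stopping_epoch(losses, patience=3))
```

In a real training loop the same logic runs online: the weights are saved whenever the dev loss improves, and training halts when the patience budget is used up. Note the tradeoff visible in the sample data: a small patience stops before the late improvement at the final epoch.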

5. In-depth Understanding of Neural Network Optimization Setup

In deep learning, setting up optimization problems is crucial because a well-optimized model can significantly improve learning outcomes and efficiency in handling complex tasks. This section discusses important topics such as input data normalization, gradient vanishing and explosion, weight initialization in deep networks, numerical gradient approximation, and gradient checking.

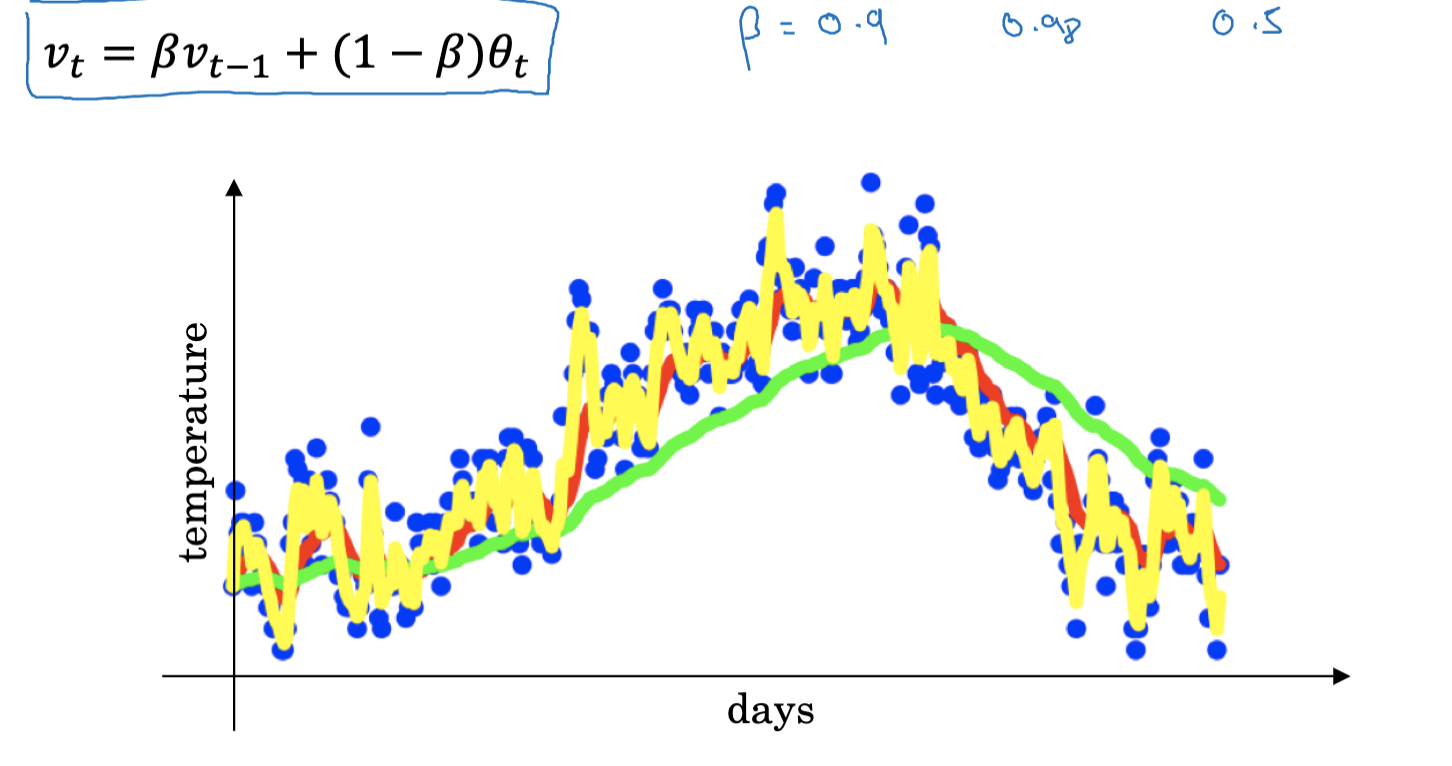

5.1 Normalizing Input Data

Before training neural network models, we typically preprocess the input data, with normalization being a common method. It stabilizes the training process and enhances model performance. The main idea is to scale the raw input data to have zero mean and unit variance.

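Normalization involves two steps: subtracting the mean to center the data and dividing by the standard deviation to normalize the variance. For a feature $x$, the normalized value is

$$x_{\text{norm}} = \frac{x - \mu}{\sigma}$$

where $\mu = \frac{1}{m}\sum_{i=1}^{m} x^{(i)}$ is the mean of the feature over the $m$ training examples and $\sigma^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x^{(i)} - \mu\right)^2$ is its variance.

It is crucial to use the same normalization parameters for both training and test sets, meaning the test set should be normalized using the mean and standard deviation computed on the training set. This relies on the assumption that the test set and training set come from the same data source.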
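The rule about reusing training statistics can be sketched as two small functions: one that computes the mean and standard deviation from the training data, and one that applies them to any split. The names `fit_normalizer` and `normalize`, and the small `eps` guard against division by zero, are assumptions for illustration. A single feature is shown as a plain list.

```python
import math


def fit_normalizer(train_values):
    """Compute the mean and standard deviation of one feature (training set only)."""
    m = len(train_values)
    mu = sum(train_values) / m
    variance = sum((x - mu) ** 2 for x in train_values) / m
    return mu, math.sqrt(variance)


def normalize(values, mu, sigma, eps=1e-8):
    """Center and scale values with statistics computed elsewhere."""
    return [(x - mu) / (sigma + eps) for x in values]


train_feature = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
test_feature = [5.0, 7.0]

mu, sigma = fit_normalizer(train_feature)      # statistics come from the training set
train_norm = normalize(train_feature, mu, sigma)
test_norm = normalize(test_feature, mu, sigma)  # the same statistics are reused here
print(mu, sigma)
print(test_norm)
```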

5.2 Gradient Vanishing and Explosion Problems

When using gradient descent or its variants for optimization, we might encounter gradient vanishing or explosion issues, especially in deep neural networks.

- Gradient Vanishing: This problem occurs when gradients shrink exponentially as they are propagated backward through many layers, making it hard to update the weights of the early layers (those closest to the input). It can cause very slow learning or a complete inability to learn.

- Gradient Explosion: Opposite to vanishing gradients, this issue arises when gradients increase exponentially, leading to excessively large weight updates and preventing the network from converging.

These issues are major challenges in training deep neural networks. A partial solution is to carefully select the weight initialization method.
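A toy calculation shows why depth makes these problems exponential. The sketch below assumes a deliberately simplified network in which every layer is linear and multiplies its input by the same scalar weight, so the gradient passing back through `depth` layers is scaled by `weight ** depth`. Real networks have matrices and nonlinearities, but the same compounding effect applies.

```python
def gradient_scale(weight, depth):
    """Factor applied to a gradient after passing back through `depth`
    linear layers that each multiply by `weight`."""
    scale = 1.0
    for _ in range(depth):
        scale *= weight
    return scale


for w in (0.5, 1.0, 1.5):
    print(f"weight={w}: scale after 50 layers = {gradient_scale(w, 50):.3e}")
```

Weights slightly below 1 drive the factor toward zero, weights slightly above 1 blow it up, and only values near 1 keep it stable. This is the intuition behind choosing the initialization scale carefully.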

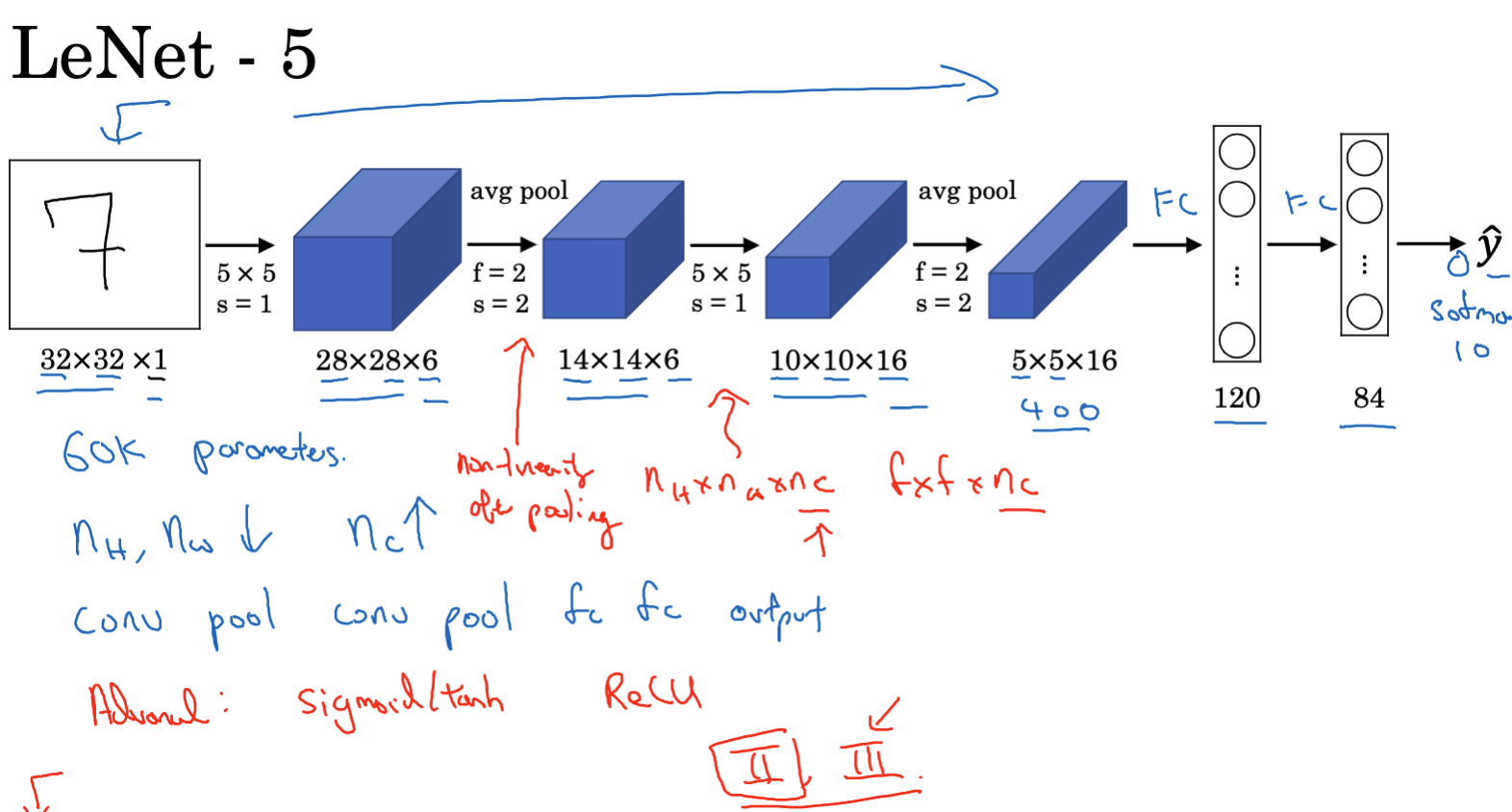

5.3 Weight Initialization in Deep Networks

Weight initialization significantly impacts the performance of deep neural networks. Good initialization can accelerate convergence and reduce the risk of poor local minima or gradient issues. If all weights in a layer start with the same value, the neurons are symmetric: they compute the same output and receive the same gradient, so they never learn different features. We therefore use random initialization to break this symmetry.

If weights are too large, neurons’ activation functions (e.g., sigmoid, tanh) might saturate, causing gradient vanishing. If weights are too small, the signal might decay too quickly, leading to poor training performance.

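Xavier initialization (also known as Glorot initialization) is effective for deep networks with tanh or sigmoid activations. Assuming a neuron has $n_{\text{in}}$ inputs and $n_{\text{out}}$ outputs, the weights are drawn from a distribution with mean 0 and variance $\frac{2}{n_{\text{in}} + n_{\text{out}}}$; a common simplified variant uses $\frac{1}{n_{\text{in}}}$.

For ReLU or its variants (like Leaky ReLU), He initialization is preferable, with weights drawn from a Gaussian distribution with mean 0 and variance $\frac{2}{n_{\text{in}}}$, where $n_{\text{in}}$ is the number of inputs to the neuron.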

Proper weight initialization is crucial for training deep neural networks, helping models converge faster and reducing gradient-related issues.
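The two schemes differ only in the variance of the Gaussian they sample from. The sketch below assumes the simplified Xavier variant with variance $1/n_{\text{in}}$ and He initialization with variance $2/n_{\text{in}}$; the function name `init_weights` and the `scheme` argument are hypothetical. Weights are stored as a list of rows to avoid any library dependency.

```python
import math
import random


def init_weights(n_in, n_out, scheme="he", rng=random):
    """Return an n_out x n_in weight matrix as a list of rows.

    scheme="he":     Gaussian, mean 0, variance 2 / n_in (for ReLU-like units)
    scheme="xavier": Gaussian, mean 0, variance 1 / n_in (simplified variant)
    """
    if scheme == "he":
        std = math.sqrt(2.0 / n_in)
    elif scheme == "xavier":
        std = math.sqrt(1.0 / n_in)
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]


W = init_weights(n_in=200, n_out=100, scheme="he", rng=random.Random(0))
flat = [w for row in W for w in row]
mean = sum(flat) / len(flat)
variance = sum((w - mean) ** 2 for w in flat) / len(flat)
print(len(W), len(W[0]), round(variance, 4))
```

Biases are usually initialized to zero; the random weights alone are enough to break symmetry.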

5.4 Gradient Checking

During deep learning training, we compute the gradients of the loss function to optimize model parameters using gradient descent or its variants (e.g., Adam, RMSProp). This process involves complex backpropagation and chain rule differentiation, prone to errors. Gradient checking ensures our gradient calculations are correct.

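The basic idea is to numerically approximate the gradient for each weight and compare it with the gradient from backpropagation. Mathematically, the derivative of a function $f$ at $\theta$ can be approximated by the two-sided difference

$$f'(\theta) \approx \frac{f(\theta + \epsilon) - f(\theta - \epsilon)}{2\epsilon}$$

where $\epsilon$ is a small positive number, for example $10^{-7}$.

For each parameter $\theta_i$, perturb only that parameter to obtain the approximation $d\theta_{\text{approx}}[i]$, then compare the full vector with the backpropagation gradient $d\theta$ using the relative difference

$$\frac{\lVert d\theta_{\text{approx}} - d\theta \rVert_2}{\lVert d\theta_{\text{approx}} \rVert_2 + \lVert d\theta \rVert_2}$$

As a rough guide with $\epsilon = 10^{-7}$, a relative difference around $10^{-7}$ suggests the gradients agree, while a value around $10^{-3}$ or larger usually points to a bug. When using gradient checking, keep the following in mind: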

- It should only be used during debugging, not training, due to its slow computation.

- If the algorithm fails the gradient check, you need to inspect its components individually to find the issue.

- Include regularization terms in the gradient check if regularization is used.

- Gradient checking cannot be used with dropout, because the randomly dropped units make the cost function non-deterministic. Set the keep probability to 1.0 (i.e., disable dropout) when performing gradient checks.

- Occasionally, backpropagation might be correct when weights and biases are near zero but less accurate as they grow. Run gradient checks after random initialization and again after some training.
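The procedure can be sketched on a toy cost function. Everything below is illustrative: `cost`, `analytic_grad`, and `buggy_grad` are hypothetical stand-ins for a real network's cost and backpropagation code, and the parameters are a flat list of floats.

```python
import math


def numerical_gradient(f, theta, eps=1e-7):
    """Two-sided difference approximation of the gradient of f at theta."""
    grad = []
    for i in range(len(theta)):
        plus = list(theta)
        minus = list(theta)
        plus[i] += eps   # perturb only parameter i
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad


def relative_difference(g1, g2):
    """||g1 - g2|| / (||g1|| + ||g2||), using Euclidean norms."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(g1, g2)))
    den = math.sqrt(sum(a * a for a in g1)) + math.sqrt(sum(b * b for b in g2))
    return num / den if den > 0 else 0.0


def cost(theta):
    # Toy cost: sum of squares plus a cross term between the first two parameters.
    return sum(t * t for t in theta) + theta[0] * theta[1]


def analytic_grad(theta):
    # Correct gradient of `cost` for a two-parameter theta.
    return [2 * theta[0] + theta[1], 2 * theta[1] + theta[0]]


def buggy_grad(theta):
    # Deliberately wrong: the cross term is missing.
    return [2 * theta[0], 2 * theta[1]]


theta = [1.5, -0.5]
approx = numerical_gradient(cost, theta)
print(relative_difference(approx, analytic_grad(theta)))  # tiny: gradients agree
print(relative_difference(approx, buggy_grad(theta)))     # large: a bug is present
```

Because each parameter needs two extra cost evaluations, the check scales linearly with the number of parameters, which is why it belongs in debugging rather than in the training loop.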

In this blog, we delved into practical aspects of deep learning, including training/validation/test set division, bias-variance trade-off, regularization methods, and optimization setup. This article covers the first week of Course 2, with more content to follow in the second and third weeks. I hope to continue this journey.